From Writers Write:

AI can now generate stories, and scammers are already using the tool to try to get published. Editors don’t want to receive these subpar AI-generated submissions. Clarkesworld Magazine is among the publications being bombarded by this new form of submission spam, a result of the new ChatGPT AI tool.

Neil Clarke, Editor of Clarkesworld, announced that the science fiction magazine is temporarily closing its doors to submissions after receiving a large number of submissions clearly created with the help of ChatGPT. He explains the decision in a series of tweets.

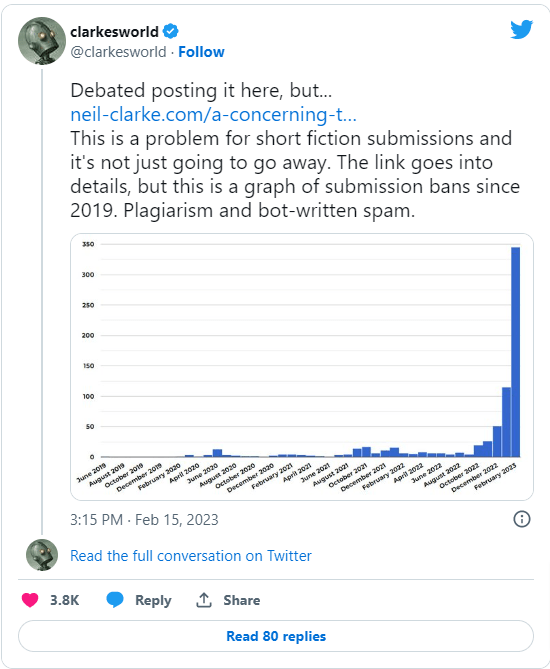

Clarke also shared a graph that shows a massive increase in submission bans since ChatGPT arrived. The magazine bans people caught plagiarizing from submitting again. It has gone from banning a few people a month to banning hundreds of submitters in the past couple of months. In a blog post, Clarke calls it a concerning trend.

Link to the rest at Writers Write

“Anybody who thinks chatbots can deliver meaningful content must also think wordsmithing is all that matters in writing.”

I think ChatGPT can deliver meaningful content. It has demonstrated that. It can also produce nonsense.

Wordsmithing? I do indeed think it matters in writing, but it is hardly all that matters.

A professor’s view of ChatGPT papers.

https://acoup.blog/2023/02/17/collections-on-chatgpt/

Good find.

Signature point:

“ChatGPT’s greatest limitation is that it doesn’t know anything about anything; it isn’t storing definitions of words or a sense of their meanings or connections to real world objects or facts to reference about them. ChatGPT is, in fact, incapable of knowing anything at all. The assumption so many people make is that when they ask ChatGPT a question, it ‘researches’ the answer the way we would, perhaps by checking Wikipedia for the relevant information. But ChatGPT doesn’t have ‘information’ in this sense; it has no discrete facts. To put it one way, ChatGPT does not and cannot know that “World War I started in 1914.” What it does know is that “World War I” “1914” and “start” (and its synonyms) tend to appear together in its training material, so when you ask, “when did WWI start?” it can give that answer. But it can also give absolutely nonsensical or blatantly wrong answers with exactly the same kind of confidence because the language model has no space for knowledge as we understand it; it merely has a model of the statistical relationships between how words appear in its training material.”

This is why the Bing Chat feature has a separate “grounding” operator (PROMETHEUS) feeding real time web search data to the chat *interface*. Therein lies the meaning behind the bot output.

To a large extent, chatbots are a front end (like a CLI or a GUI or, as he says, an autocomplete/autocorrection/autocorruption keyboard app) to the real, useful app. That is the difference between the conversational chatbot and the conversational search application: two very different products with two very different use cases.

In reality, the chatbot is just assembling words based on usage probabilities in relation to the prompt. It neither knows nor cares what the words or sentences mean. That is not its function.

Other generative apps based on the underlying tech are a different story, because for them the relationship between the elements they manipulate is the meaning. The art tools, for one.

I haven’t heard of a generative “AI” music app, but I expect that one would produce tremendously persistent earworms, catchy jingles, and pop instrumentals, perhaps even “classical” music and soundtracks. Lots of money to be made there.

But in narrative text?

Nope. The same tech that assembles the text block can deconstruct it and recognize the chatbot that assembled it by recognizing the probability data that linked the words.

The words in a story are just the delivery vehicle for the meaning. The meaning is the true product: the content.

Anybody who thinks chatbots can deliver meaningful content must also think wordsmithing is all that matters in writing.