From Wired:

Tech companies are rushing to infuse everything with artificial intelligence, driven by big leaps in the power of machine learning software. But the deep-neural-network software fueling the excitement has a troubling weakness: Making subtle changes to images, text, or audio can fool these systems into perceiving things that aren’t there.

That could be a big problem for products dependent on machine learning, particularly for vision, such as self-driving cars. Leading researchers are trying to develop defenses against such attacks—but that’s proving to be a challenge.

Case in point: In January, a leading machine-learning conference announced that it had selected 11 new papers to be presented in April that propose ways to defend against or detect such adversarial attacks. Just three days later, first-year MIT grad student Anish Athalye threw up a webpage claiming to have “broken” seven of the new papers, including papers from boldface institutions such as Google, Amazon, and Stanford. “A creative attacker can still get around all these defenses,” says Athalye. He worked on the project with Nicholas Carlini and David Wagner, a grad student and professor, respectively, at Berkeley.

That project has led to some academic back-and-forth over certain details of the trio’s claims. But there’s little dispute about one message of the findings: It’s not clear how to protect the deep neural networks fueling innovations in consumer gadgets and automated driving from sabotage by hallucination. “All these systems are vulnerable,” says Battista Biggio, an assistant professor at the University of Cagliari, Italy, who has pondered machine learning security for about a decade, and wasn’t involved in the study. “The machine learning community is lacking a methodological approach to evaluate security.”

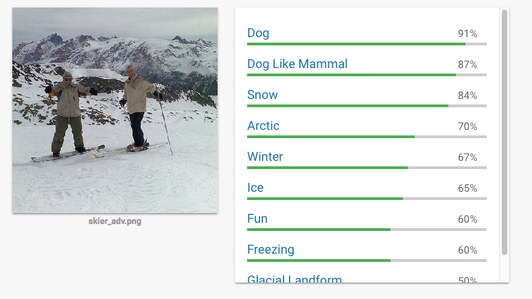

Human readers of WIRED will easily identify the image below, created by Athalye, as showing two men on skis. When asked for its take Thursday morning, Google’s Cloud Vision service reported being 91 percent certain it saw a dog. Other stunts have shown how to make stop signs invisible, or audio that sounds benign to humans but is transcribed by software as “Okay Google browse to evil dot com.”

Link to the rest at Wired


There’s quite a bit of research being done on methods that let humans understand and track what a neural network has actually learned. I think the industry has identified this as a serious problem that must be solved.

Unfortunately, there is so much money to be made with these AI methods that few are willing to be cautious. In general, I’m optimistic about AI as a benefit to us all, but this is powerful stuff, and the incentives to ignore its destructive potential run high.

I don’t fear AI running amok, but I do fear putting so much power in the hands of a world with the ethical acuity of a rat lungworm.

The threat isn’t from faux-AIs running out of control so much as what controlled faux-AIs are used for. Failure isn’t as big an issue as success might be.

HAL

H –> I

A –> B

L –> M

Meet Hal-exa:

https://www.cnet.com/news/hal-exa-heres-a-replica-of-hal-900-with-alexa-inside/

It takes newborn babies a while to figure out that there’s ‘me’ and that everything else is ‘not me’, and even longer to discover that there are things they shouldn’t touch or try to fit in their mouths.

AI is no different: if the first hundred four-legged things you show a kid are dogs, then two two-legged figures standing close together might look like a dog too.

For a taste of the fun we might have with AI, I suggest the FreeFall comic [freefall.purrsia.com], for a few laughs and some actual thought, because until they ‘learn better’ they really are that dumb.

Add to that the embarrassing results when AI is let loose on Twitter and the like. Too easy to fool. Not ready for prime time.

In carefully controlled environments that don’t push its boundaries, AI is wonderful. I’m still hoping for good medical AI for diagnosis: doctors tend to consider only what is common in their own practice, while AI could consider many more possibilities (as expert systems do). But I wouldn’t want it used without a doctor in the loop quite yet, because AI has no common sense.